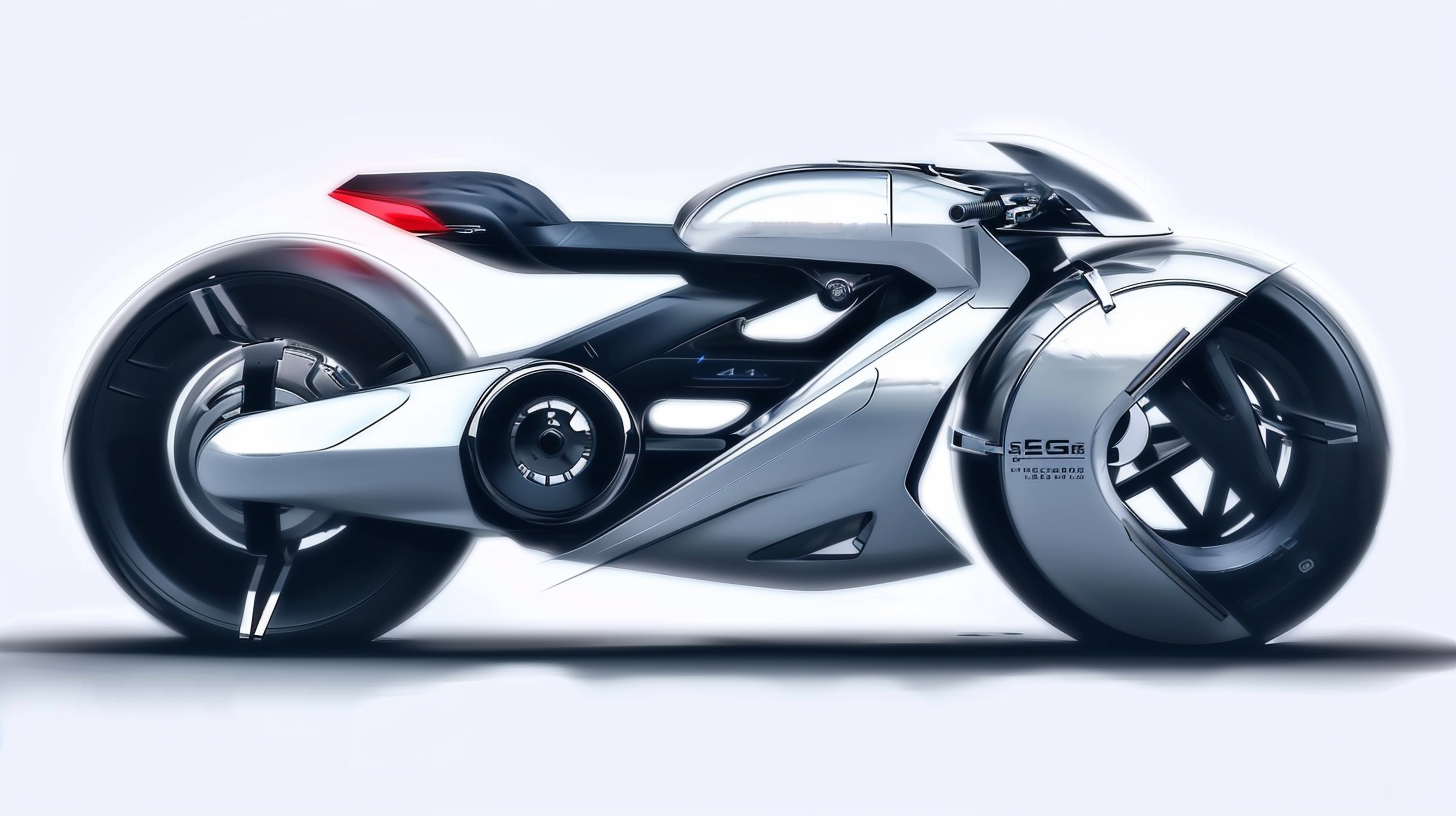

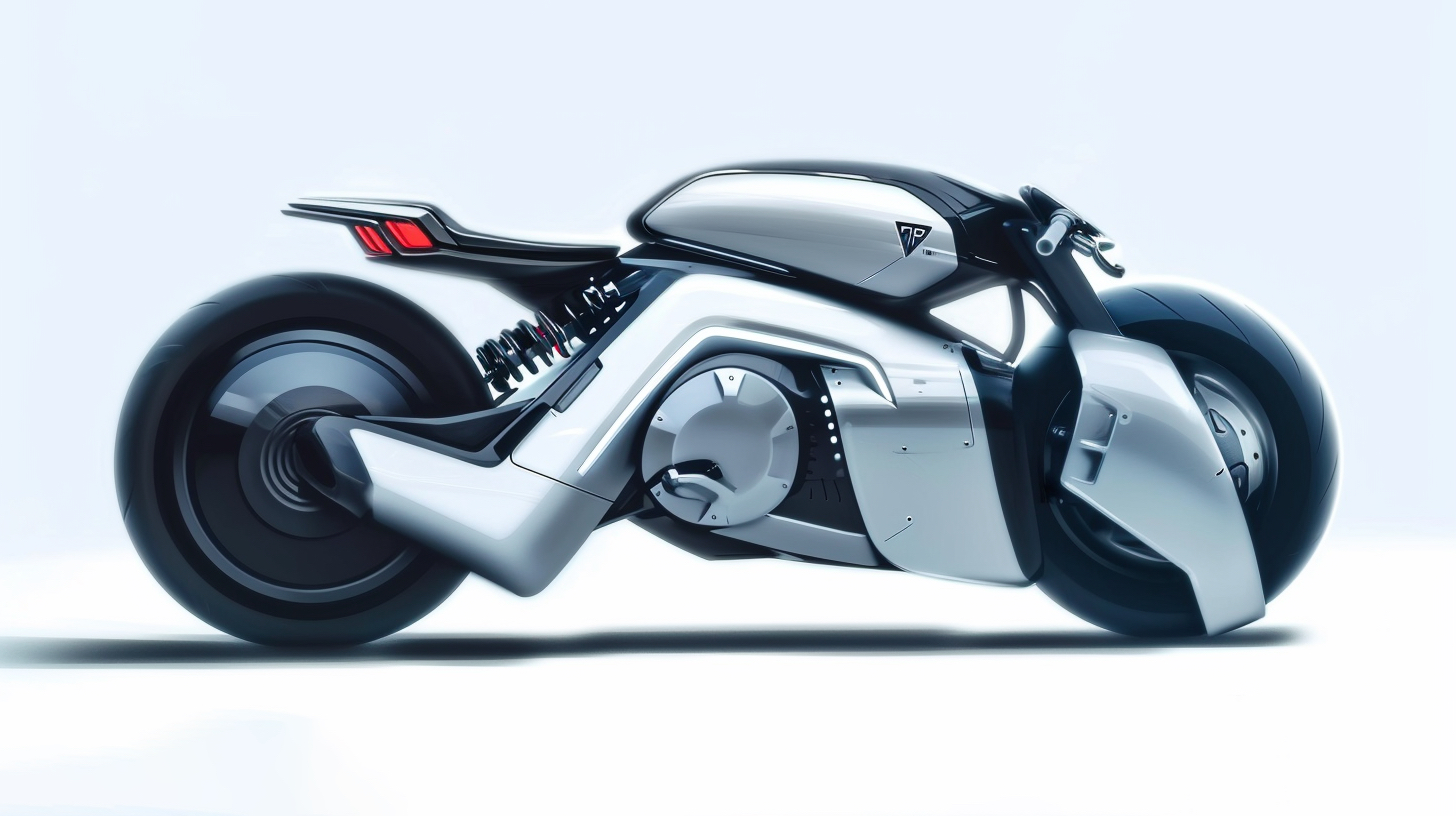

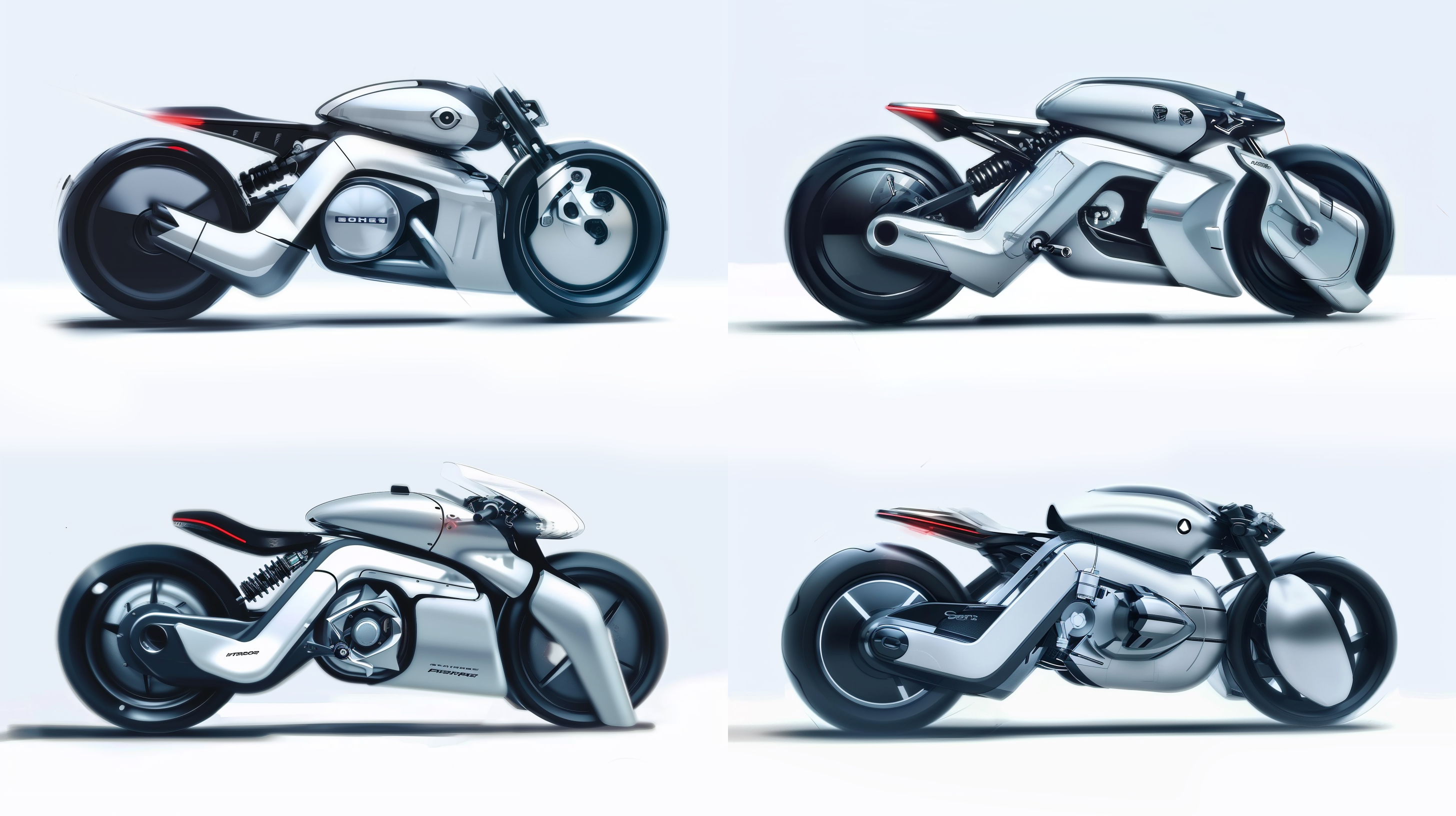

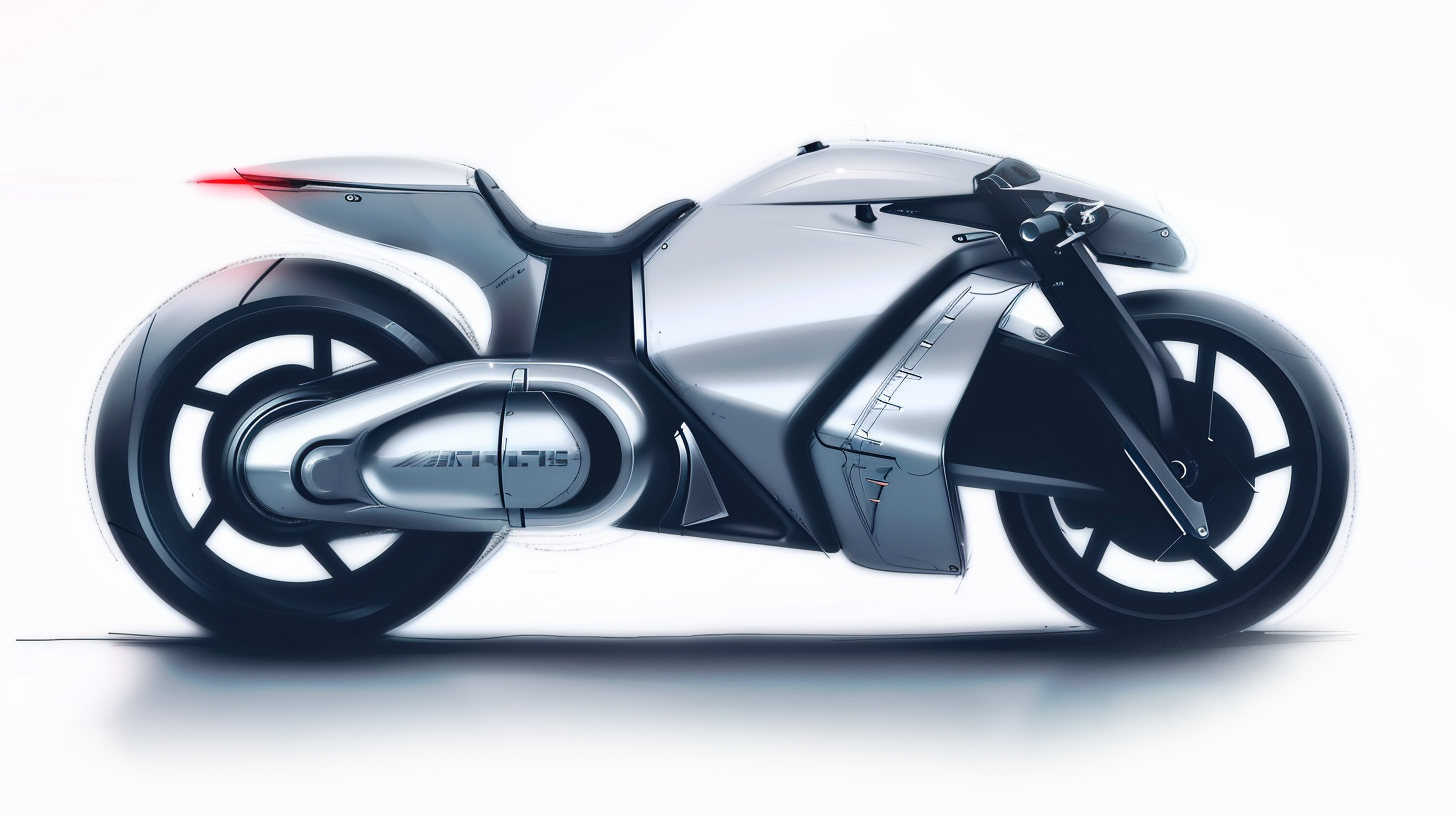

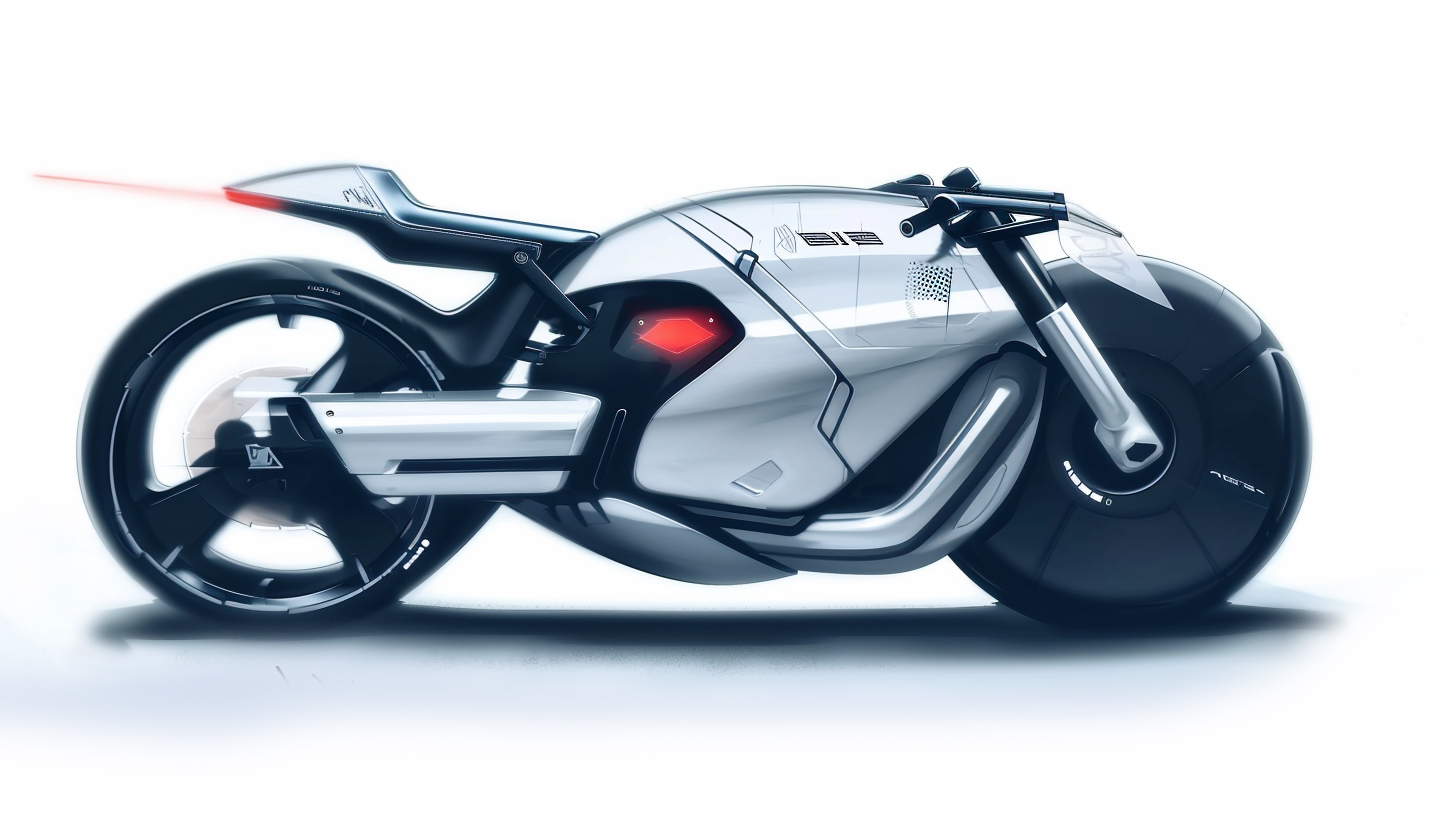

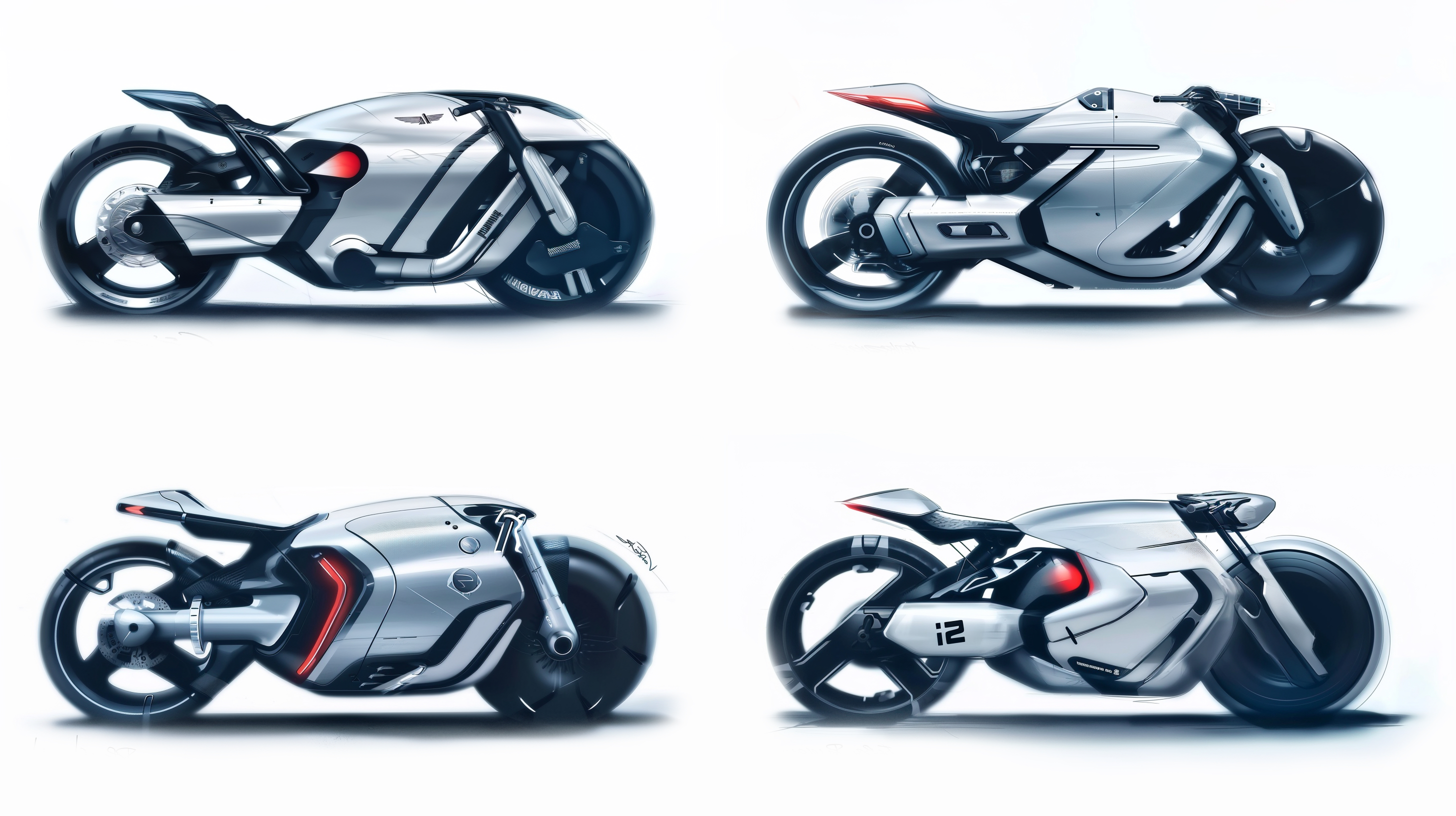

As AI tools continue to evolve and improve, it is becoming increasingly clear that AI can genuinely change our traditional workflows.

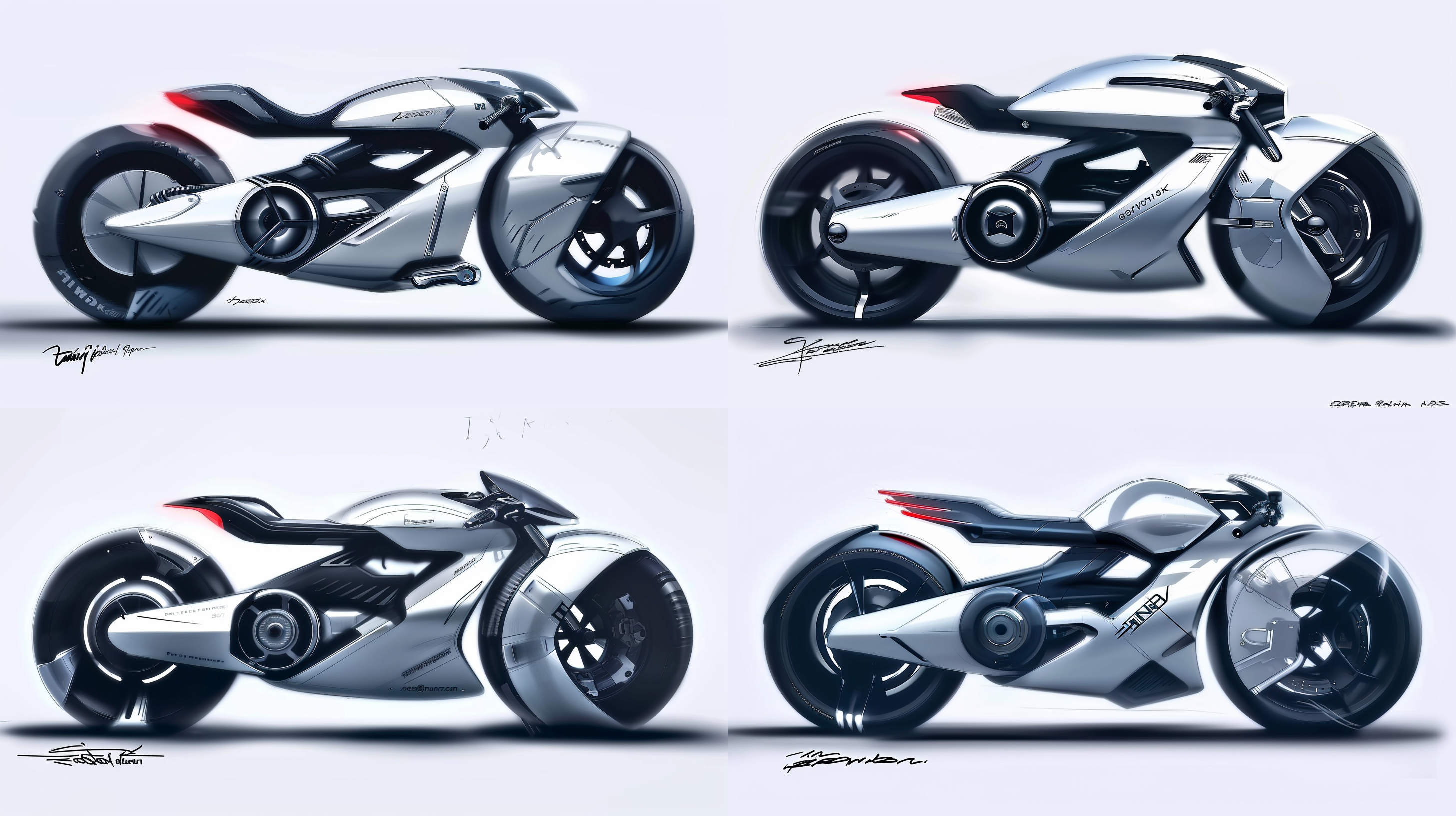

One question you may run into is: "Why do I get completely different pictures when I use the same keywords?" Today we will share the answer.

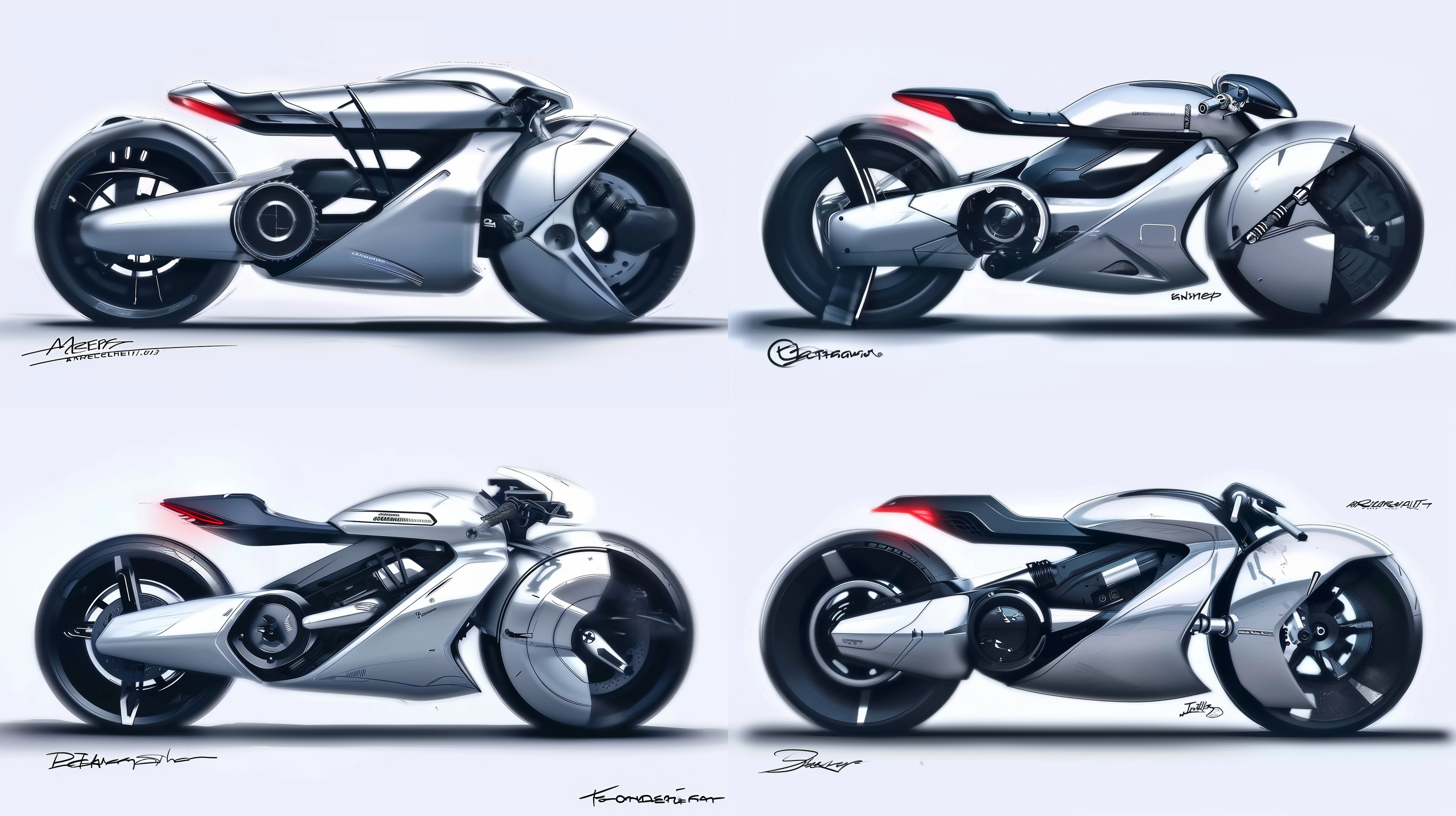

When the same keywords produce different pictures, the cause is most likely one of these three factors:

1. Seeds. The seed has a strong influence on the picture. Whether you use Stable Diffusion, Liblib, or Midjourney, a seed is always involved. In Midjourney, you can get an image's seed by reacting to it with the ✉️ (envelope) emoji, which sends you the seed in a direct message; then append `--seed XXXXX` to the end of the same prompt to reuse that seed. In Stable Diffusion, copy the full generation information of an image (all of its parameters), paste it into the prompt box, and load it into the interface. The seed is imported together with the other parameters, so you don't need to fill it in manually.

2. Different samplers or base models. In Midjourney, model versions such as niji, v5.2, and v6.0 produce different pictures, because different models and sampling algorithms lead to different results. The same applies to Stable Diffusion: the choice of sampler, such as Euler a, also changes the resulting picture.

3. Reference images (image prompts). In Midjourney we sometimes add a reference image to the prompt. When we copy someone else's prompt information, we don't have their reference image, which also makes our generated picture deviate from theirs. If we cannot find their reference image, the best we can do is pick a style category we like and generate an image to use as our own reference.
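The three factors can be illustrated with a toy model. This is a minimal sketch, not how Stable Diffusion or Midjourney work internally: the "image" is just a list of four numbers, `prompt_target` is a hypothetical stand-in for the prompt's influence, and blending the reference image into the starting noise is an assumption made for illustration. The `euler` and `heun` steps are the textbook Euler and Heun integration methods applied to a toy equation dx/dt = target - x. What the sketch does show is that the output depends on the prompt *and* the seed, the sampler, and any reference image, so changing any one of them changes the result even when the keywords stay the same.

```python
import random

# Toy illustration only: a real diffusion model denoises a latent image with a
# neural network. Here the "image" is a short list of numbers.

def prompt_target(prompt, size):
    # Hypothetical stand-in for the prompt: same keywords -> same target.
    rng = random.Random(prompt)
    return [rng.uniform(-1.0, 1.0) for _ in range(size)]

def initial_noise(seed, size):
    # Factor 1: the seed fixes the random starting noise.
    rng = random.Random(seed)
    return [rng.gauss(0.0, 1.0) for _ in range(size)]

def velocity(x, target):
    # Toy "denoiser": dx/dt = target - x pulls the noise toward the target.
    return [t - xi for xi, t in zip(x, target)]

def euler_step(x, target, h):
    # Plain Euler: one velocity evaluation per step.
    v = velocity(x, target)
    return [xi + h * vi for xi, vi in zip(x, v)]

def heun_step(x, target, h):
    # Heun: average the velocity at the start and at an Euler-predicted point.
    v1 = velocity(x, target)
    x_pred = [xi + h * vi for xi, vi in zip(x, v1)]
    v2 = velocity(x_pred, target)
    return [xi + h * (a + b) / 2 for xi, a, b in zip(x, v1, v2)]

# Factor 2: the sampler decides how each step is computed.
SAMPLERS = {"euler": euler_step, "heun": heun_step}

def generate(prompt, seed, sampler="euler", reference=None, steps=4, size=4):
    x = initial_noise(seed, size)
    if reference is not None:
        # Factor 3 (assumed toy behavior): a reference image shifts the start.
        x = [0.5 * xi + 0.5 * r for xi, r in zip(x, reference)]
    target = prompt_target(prompt, size)
    step = SAMPLERS[sampler]
    h = 1.0 / steps
    for _ in range(steps):
        x = step(x, target, h)
    return [round(v, 4) for v in x]
```

Calling `generate("a cat in a garden", seed=1234)` twice returns identical lists, while changing only the seed, passing `sampler="heun"`, or supplying a `reference` list yields different numbers, mirroring factors 1 to 3.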

Those are the reasons why the same keywords can produce completely different pictures!

The copyright of this work belongs to Aqun. No use is allowed without explicit permission from the owner.
